ChatGPT Agent Review: smarter assistant or overhyped feature?

OpenAI promises an AI that can research, reason, and take action. But after testing it myself, I found that the reality is a bit more complicated.

Ever since OpenAI announced ChatGPT Agent, I had been waiting for it to show up in my tools menu.

They described it as a model that could use a virtual computer to get things done for you. It could browse the internet, complete tasks, and act more like a real assistant. It sounded like a big step forward.

So when it finally became available, I had one big question in mind:

Could ChatGPT actually complete real-world tasks like booking a flight, ordering flowers, finding a local repair shop, or canceling a subscription, all by itself?

To find out, I ran ChatGPT Agent through a series of tasks to see if it lived up to the promise.

And honestly? After trying a variety of tasks, I wasn’t blown away. A few things were interesting, but most weren’t.

OpenAI has surprised me many times in the past with what it’s released. But this one didn’t quite land for me.

Let’s take a closer look.

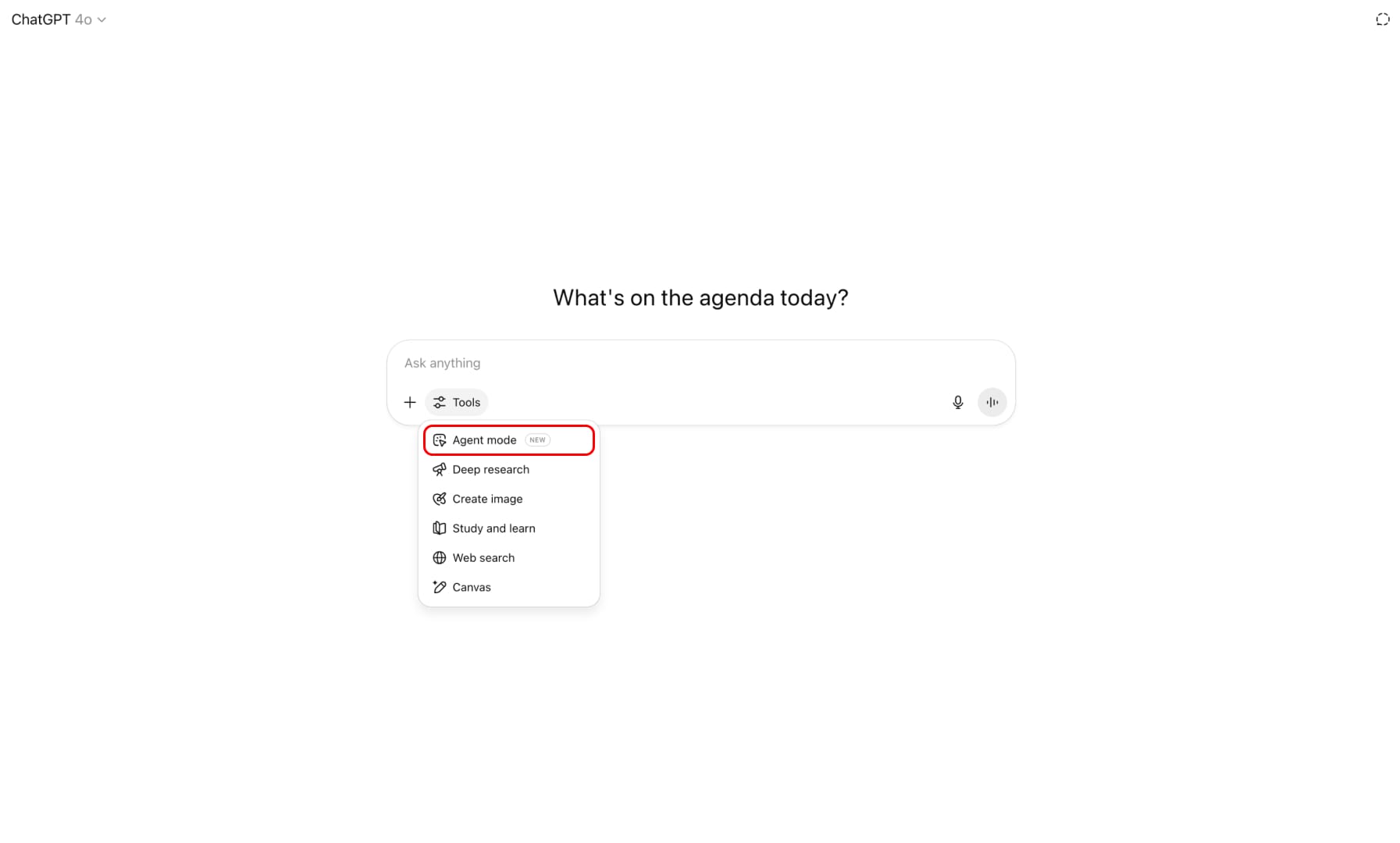

How to turn on Agent Mode in ChatGPT?

ChatGPT’s Agent Mode is now live for all Plus and Team users. It was first released for Pro accounts but is now rolling out more widely.

Once it’s available on your account, you’ll see Agent Mode under the “Tools” tab.

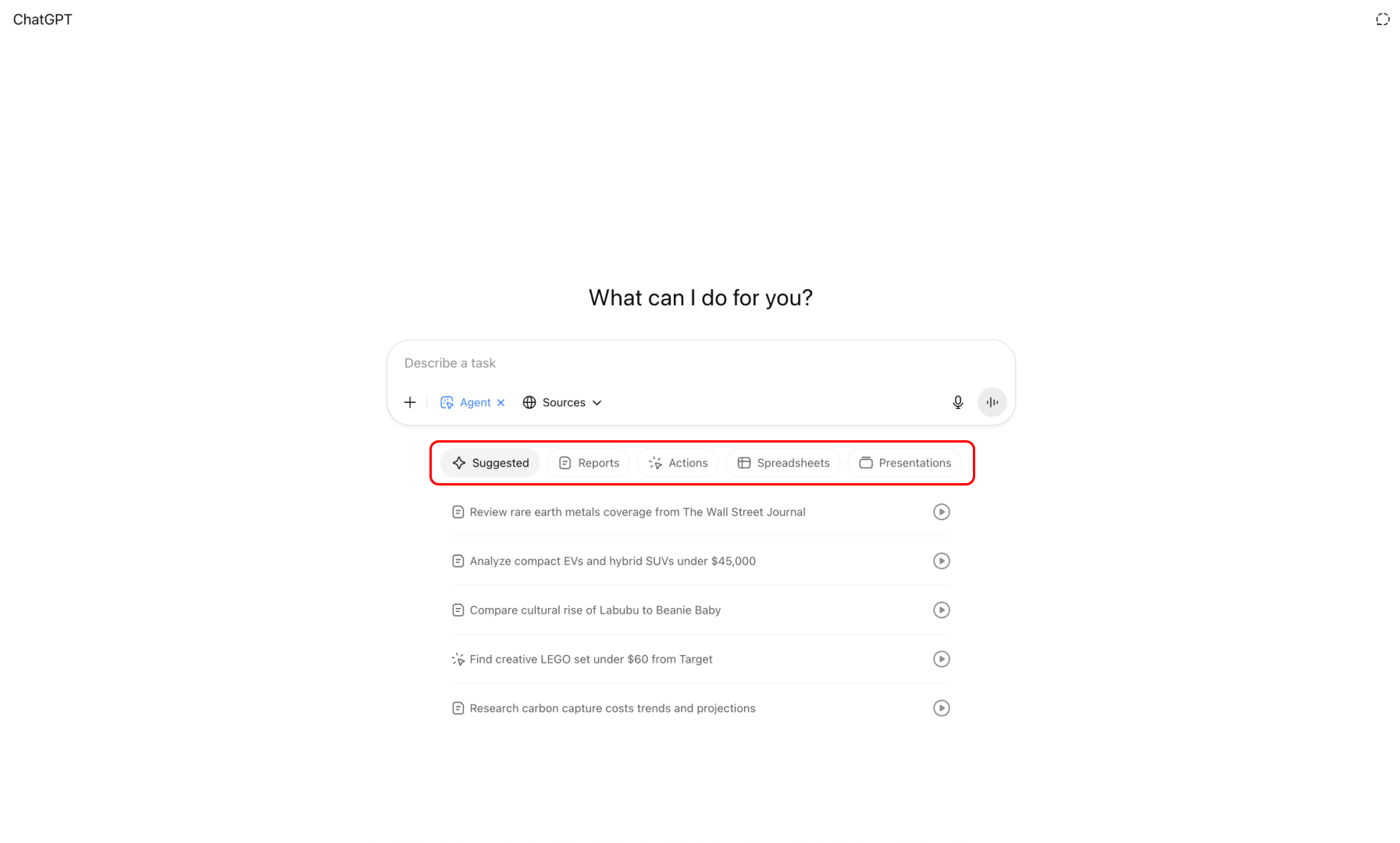

One thing I like is how easy ChatGPT makes it to get started.

With many AI tools, especially ones labeled as agents, it’s often not clear what you’re supposed to do. They sound powerful, but without direction, it’s easy to feel stuck.

ChatGPT avoids that problem by organizing Agent tasks into five simple categories:

1. Suggested

These are sample tasks you can try right away. They’re perfect for exploring what the Agent can do without needing your own idea first.

2. Reports

Use this for summaries, overviews, or pulling together insights from multiple sources. Great for things like market research or trend analysis.

3. Actions

These tasks involve the Agent doing real things for you. That could be booking something, finding a service, or submitting a form. This is where the “do it for me” part comes to life.

4. Spreadsheets

This is all about helping you create or work with spreadsheets. Whether it’s automation or data cleanup, this is meant for organizing information more efficiently.

5. Presentations

This helps you turn your notes or ideas into slides. If you need a quick draft or want to build a deck without starting from scratch, this is where to go.

To put it simply, Agent Mode feels like a mix of ChatGPT’s Deep Research and Operator features.

- Deep Research focuses on digging into topics, pulling together insights from various sources and synthesizing them.

- Operator is more about taking action for you, like filling out forms or following steps on websites.

Agent Mode brings both of those together.

It can research, think, reason, and then actually take action.

I tried a mix of the built-in suggestions and my own ideas. In the next section, I’ll walk through some of the tasks I tried and how it played out.

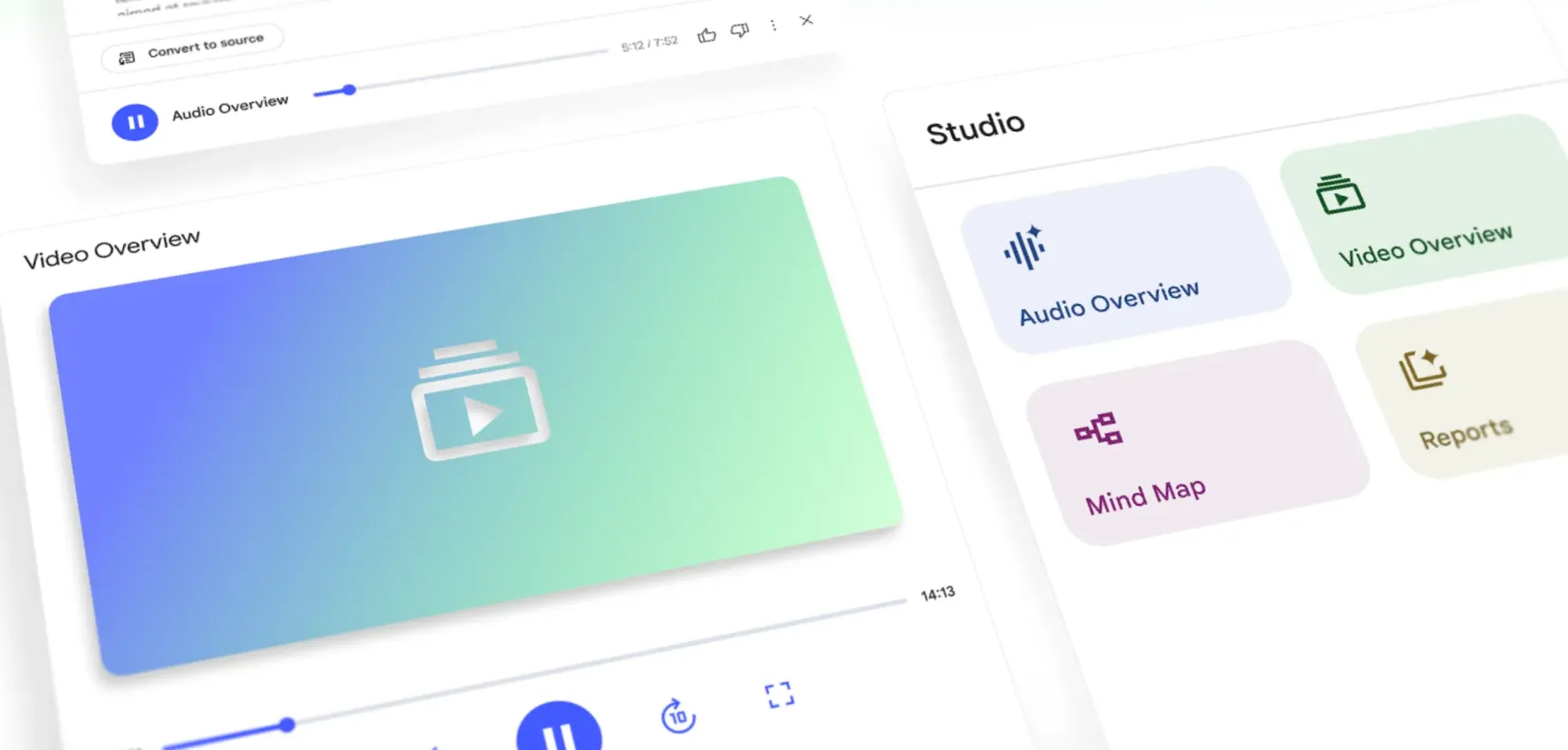

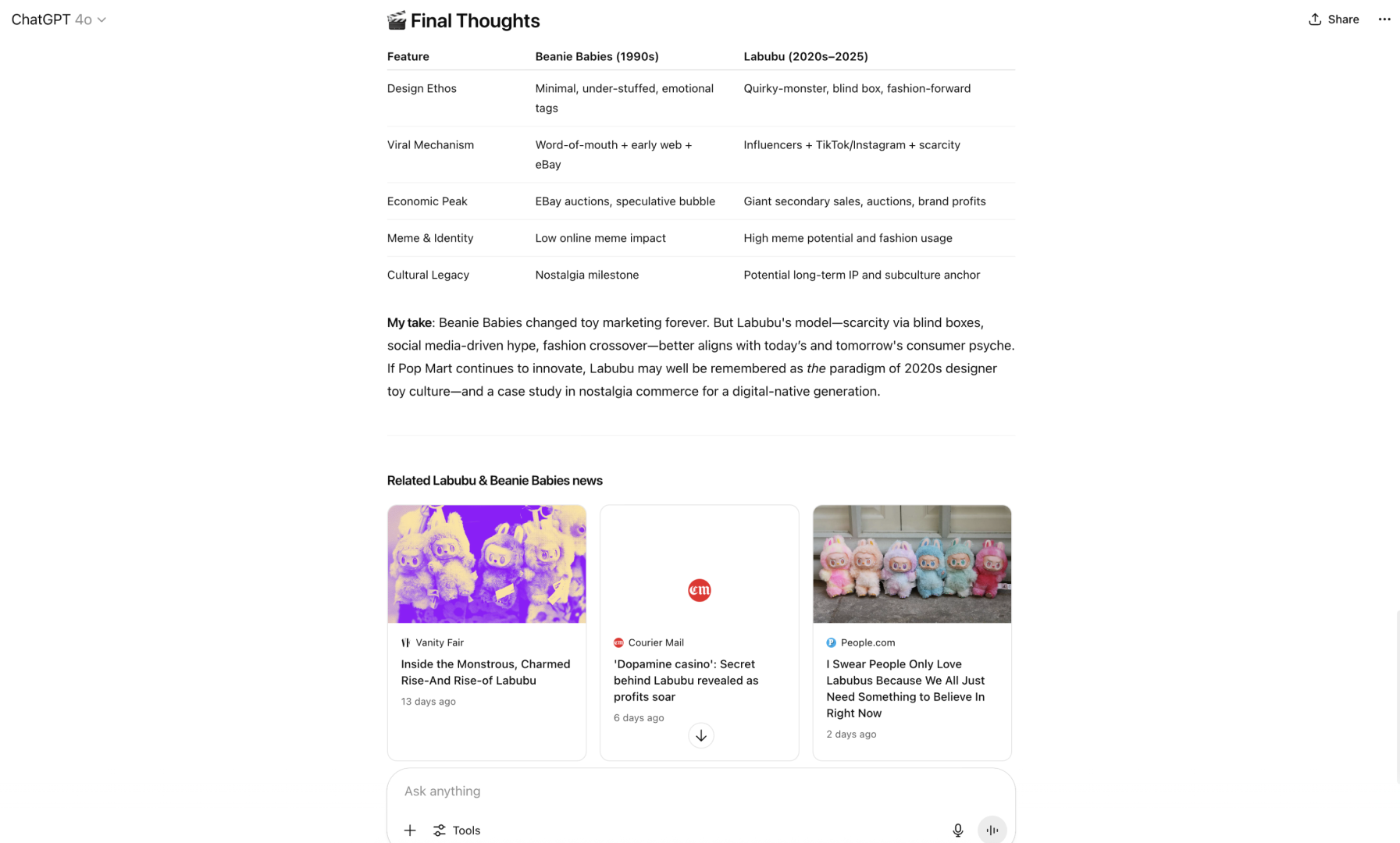

Test 1: Compare Labubu vs. Beanie Babies

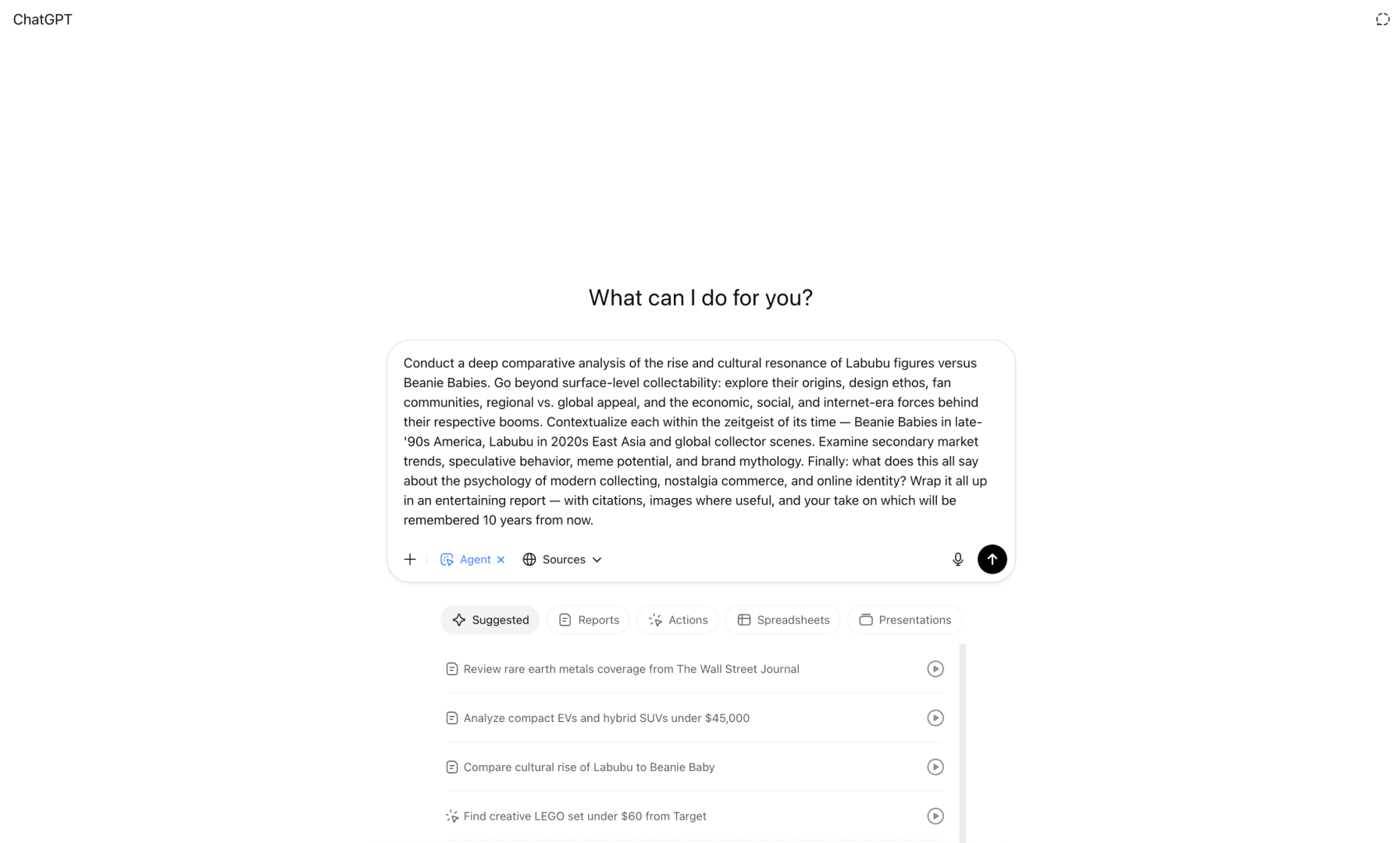

One of the suggested prompts sounded fun:

“Compare the rise and cultural impact of Labubu vs. Beanie Babies.”

So I gave it a shot.

As soon as I submitted it, things worked a bit differently than usual. The Agent started to set up a virtual computer, which is basically a cloud browser it can control on its own.

Once the virtual computer is ready, you can actually see what the Agent is doing in real time. It shows you a live screen of everything it does, like clicking around, scrolling through pages, and collecting info.

There’s also a little menu in the top right. Click it, and you’ll see a list of its actions. You can switch between the desktop view and list view anytime. You can also stop the task or take over manually if you want.

We’ll talk more about that “take over” option later. But in short: the Agent will ask you to take over if it needs login info or payment details. You can also take over at any time, just because you feel like it.

After about 15 minutes, the Agent gave me its answer. It was a huge block of text, like an essay. (The actual text was much longer than what’s shown in the image.)

It explained the history of both Labubu and Beanie Babies and listed all the sources it used.

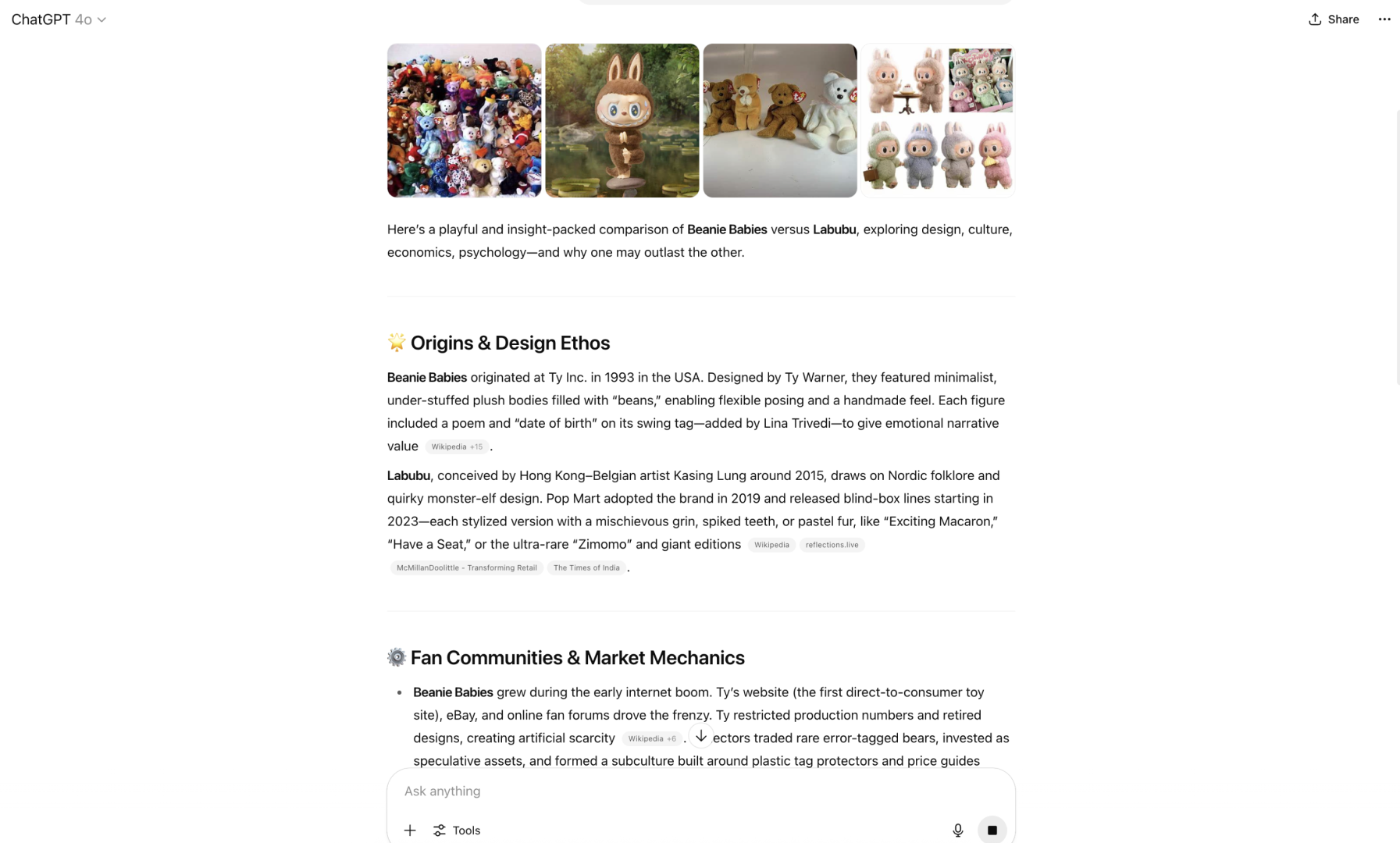

At first, it seemed fine. But I was curious: what would regular ChatGPT do with the same prompt? So I gave it a try.

It took only 3 seconds.

In that time, ChatGPT gave me a neat table, complete with the key points and even images. It was fast, clear, and easy to skim.

The Agent? Took 15 minutes. Gave me a giant wall of text. Honestly, it was kind of hard to read, and slower to get the info I actually wanted.

So… was it helpful? Sure.

But was it better than regular ChatGPT? Not even close.

This was the first time I felt like Agent Mode was trying too hard to solve a problem that didn’t really need solving.

But I kept going.

And in the next test, things got more interesting.

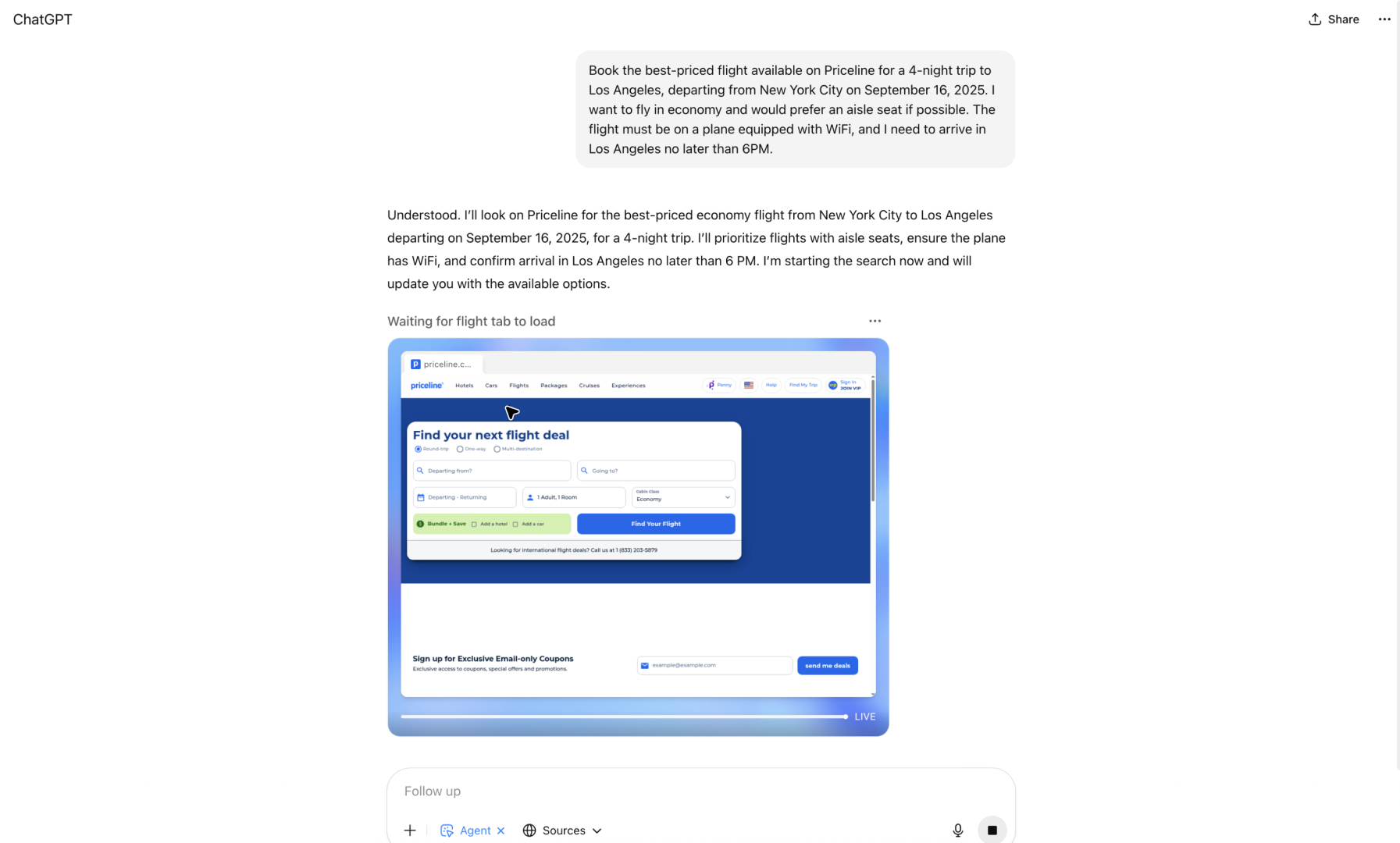

Test 2: Booking a flight with Agent Mode

Another built-in Action prompt was:

“Book a best-priced flight on Priceline.”

Seemed like a good way to test how well Agent handles a multi-step task: searching, filtering, and booking.

I gave it a few details:

- Travel dates

- Arrival time

- Wifi preference

Agent launched a virtual browser, opened Priceline.com, and started working through the process.

Just like before, you can watch everything it does live. Or switch to the list view to see the steps one by one.

Here’s what happened:

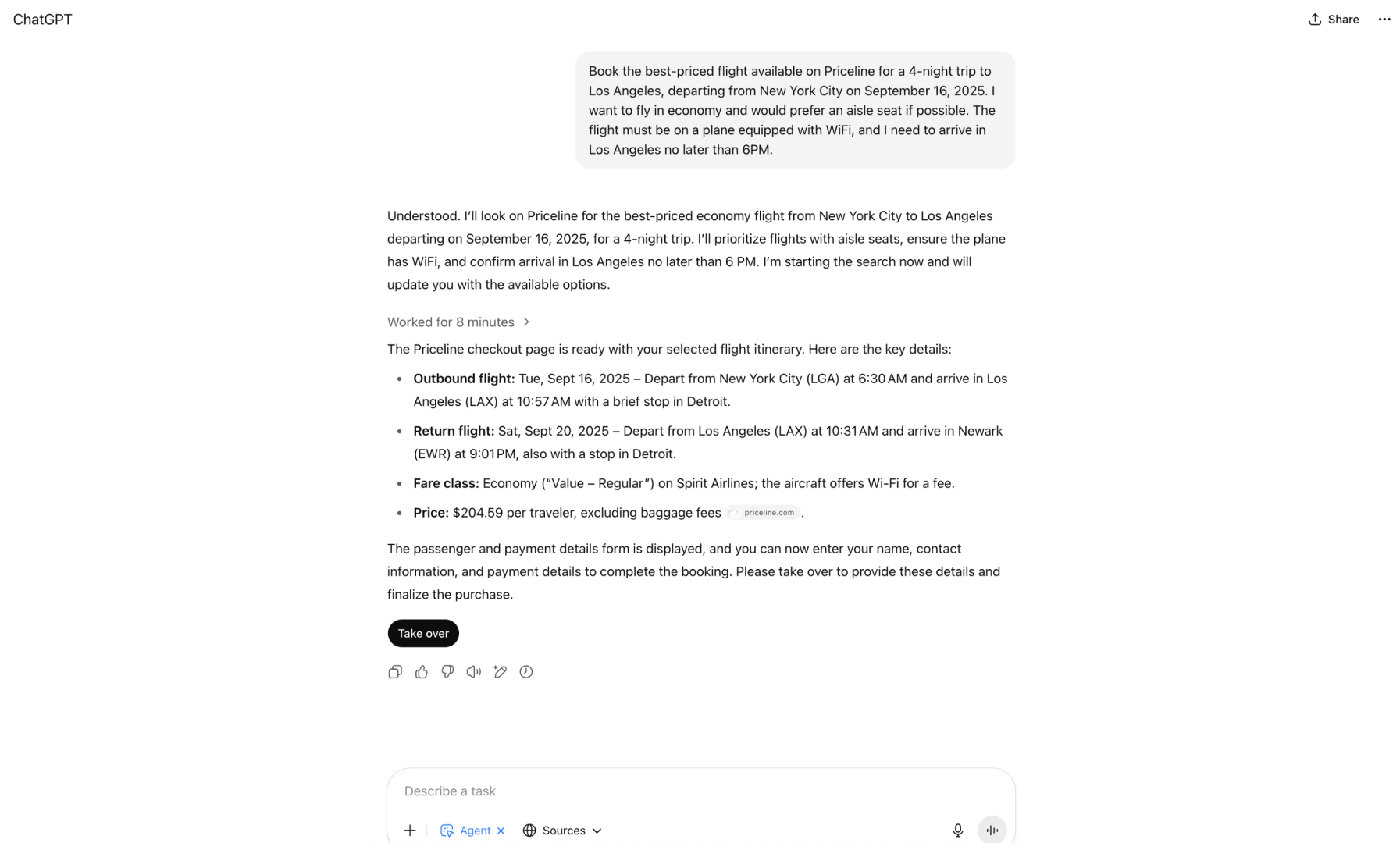

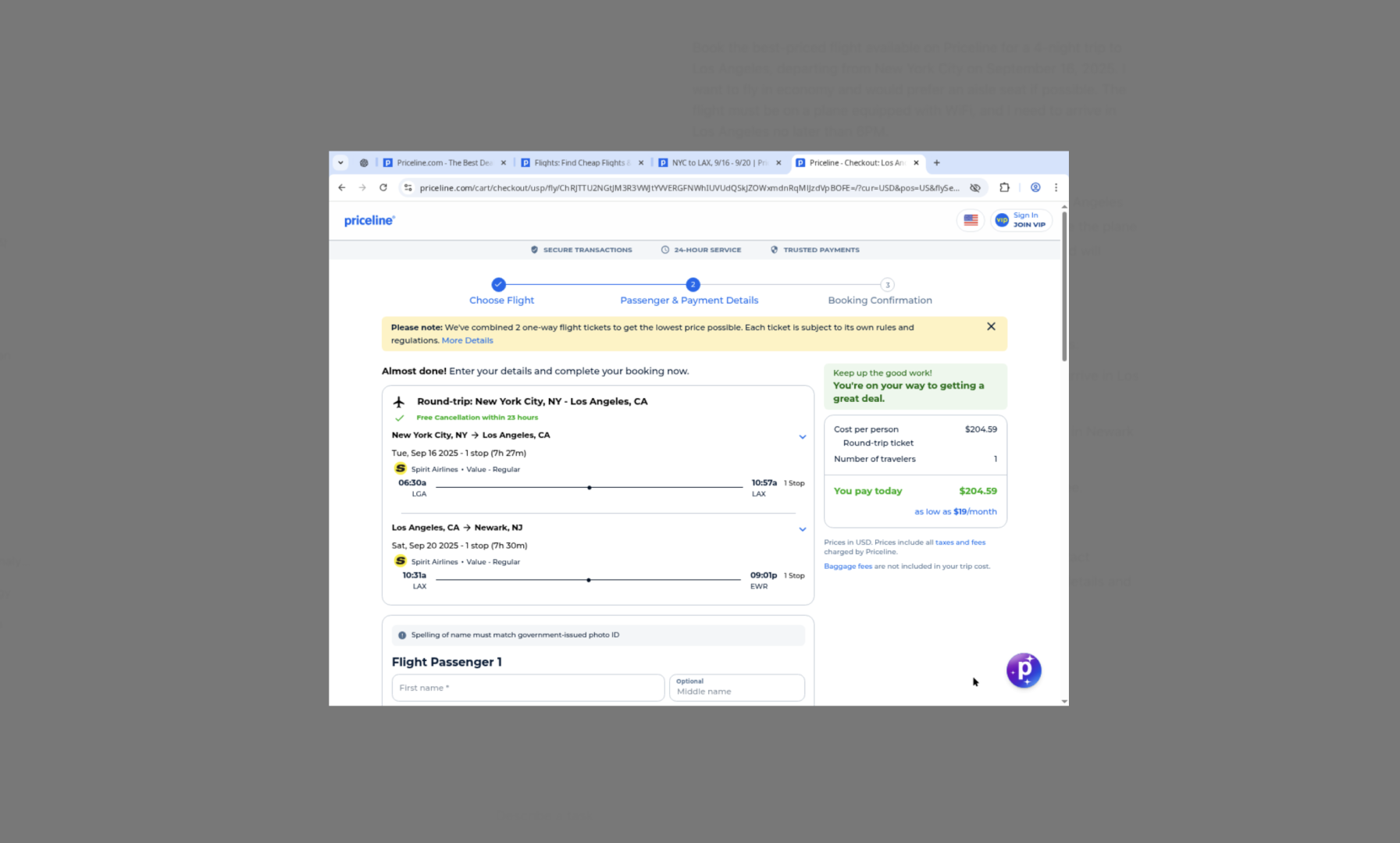

It took 8 minutes to return a single flight option, along with a prompt to take over and complete the booking.

I ran the same search manually on Priceline.com, using filters.

It took me 50 seconds.

You know what’s even worse? The link the Agent gave me wasn’t even clickable. It was just a screenshot of the booking page.

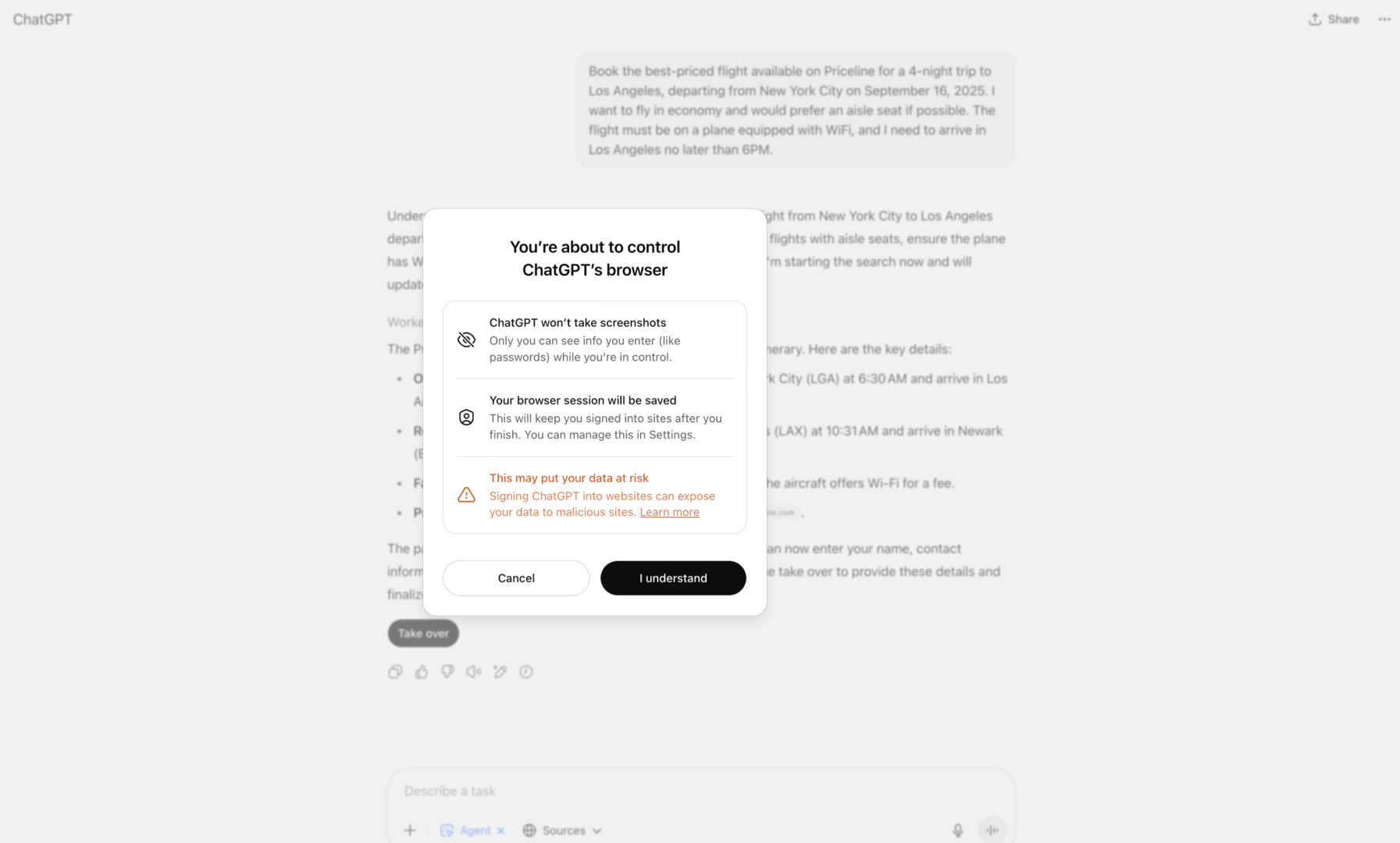

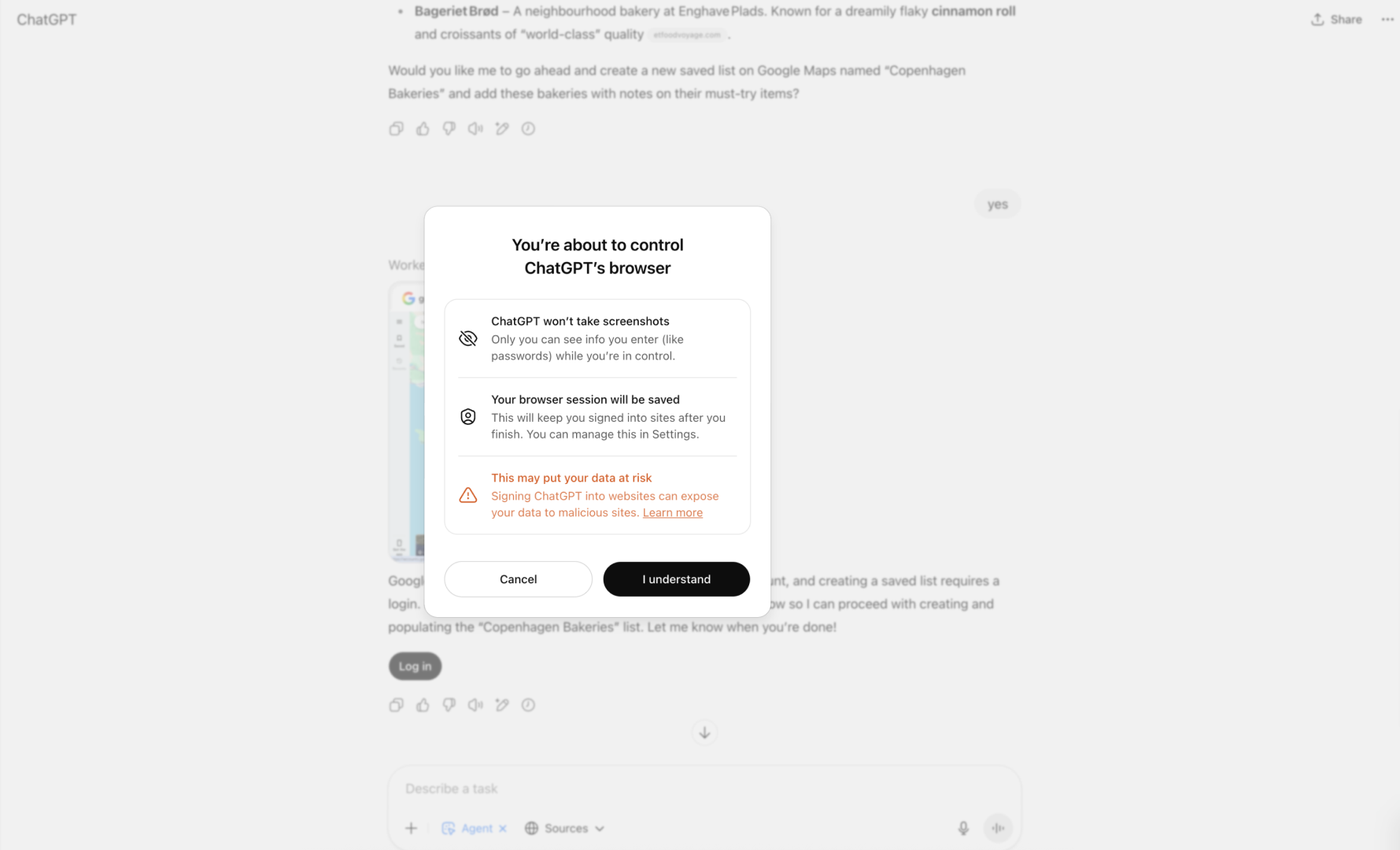

💡 Important heads-up about security:

When the Agent hits a login or checkout page, it stops and asks you to “Take over.” This makes sense. It’s a virtual browser running on OpenAI’s servers, not your own computer.

But there’s also a warning:

“Signing ChatGPT into websites can expose your data to malicious sites.”

That’s a good reminder. And honestly? I appreciate the transparency.

But it also makes me ask: why would I use Agent for this at all?

I can do the task roughly 10 times faster on my own, with no weird login warnings, and I get a working link. In that case, Agent just feels like extra effort for no real benefit.
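For anyone who wants to check that figure, here’s a quick back-of-the-envelope calculation in Python. The two timings are the ones from my Priceline test above; the variable names and the `speedup` helper are placeholders I made up for illustration.

```python
# Timings from the Priceline test, converted to seconds.
agent_seconds = 8 * 60   # Agent: about 8 minutes to return one flight option
manual_seconds = 50      # Manual search with filters: about 50 seconds


def speedup(slow: float, fast: float) -> float:
    """Return how many times faster the `fast` run was than the `slow` run."""
    return slow / fast


ratio = speedup(agent_seconds, manual_seconds)
print(f"Manual search was about {ratio:.1f}x faster")  # prints 9.6x
```

So “10 times faster” is a slight round-up, but not by much.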

For now, it’s hard to justify using Agent for simple everyday tasks like booking a flight or hotel. It’s slower, it doesn’t always get the job done correctly, and signing in carries a data-exposure risk that OpenAI itself warns about.

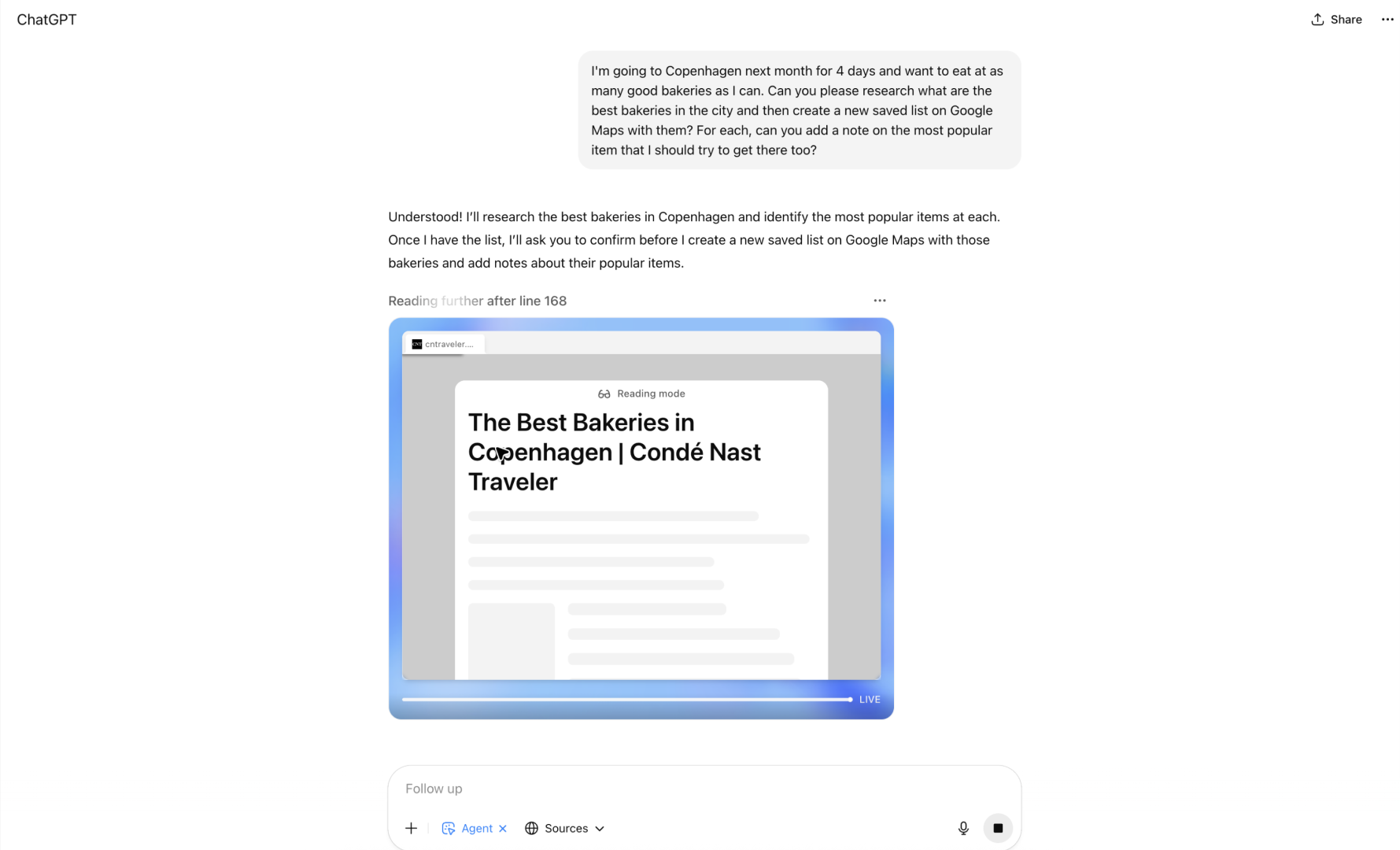

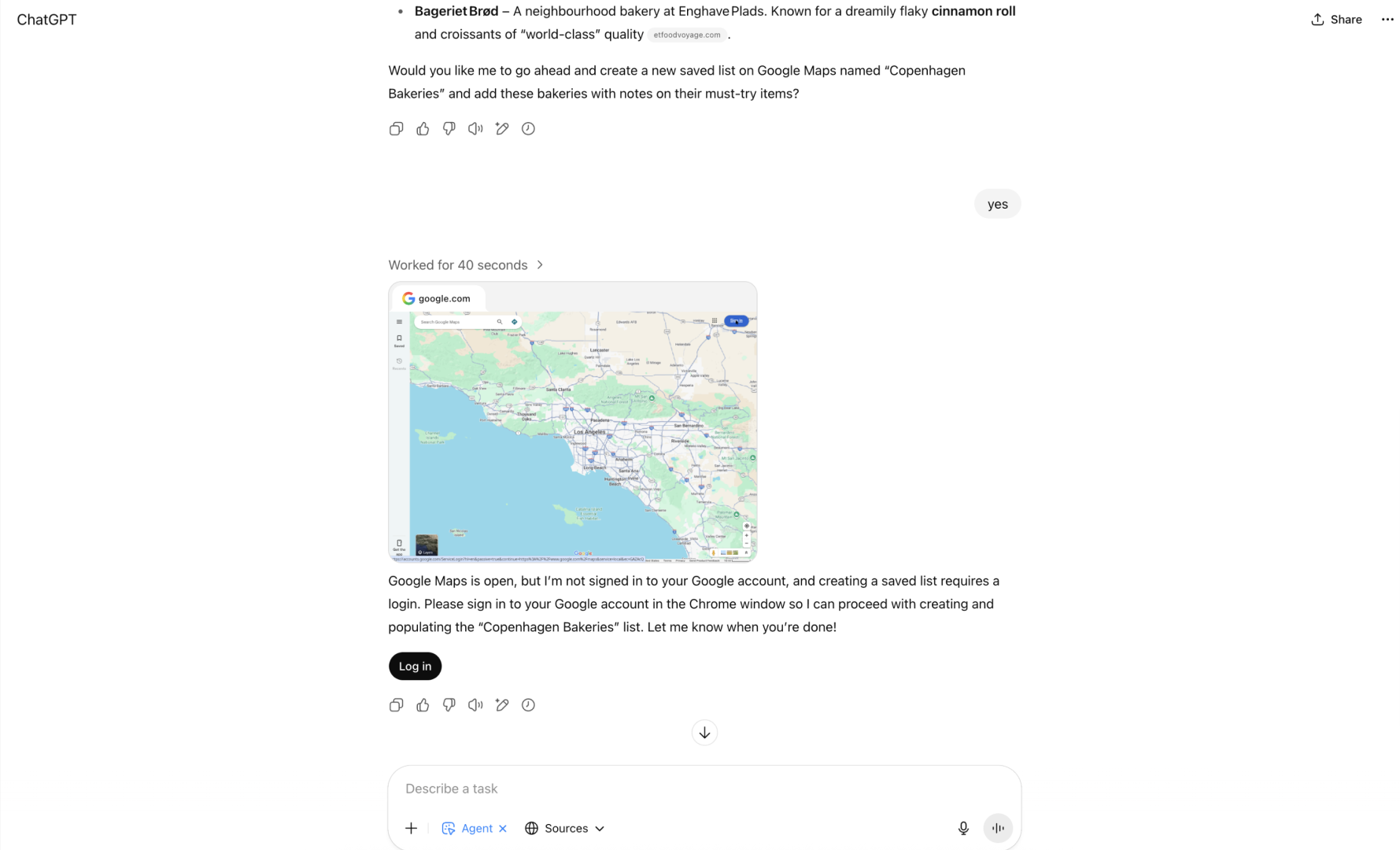

Test 3: Creating a saved list on Google Maps

Another prompt I tried was:

“Create a list on Google Maps of best Copenhagen bakeries.”

This one involved a few steps. The Agent needed to search for bakeries, figure out why they were worth visiting, create a list, and then save that list to my Google Maps account.

Interesting, right?

The Agent launched a virtual browser again and started searching for top-rated bakeries in Copenhagen.

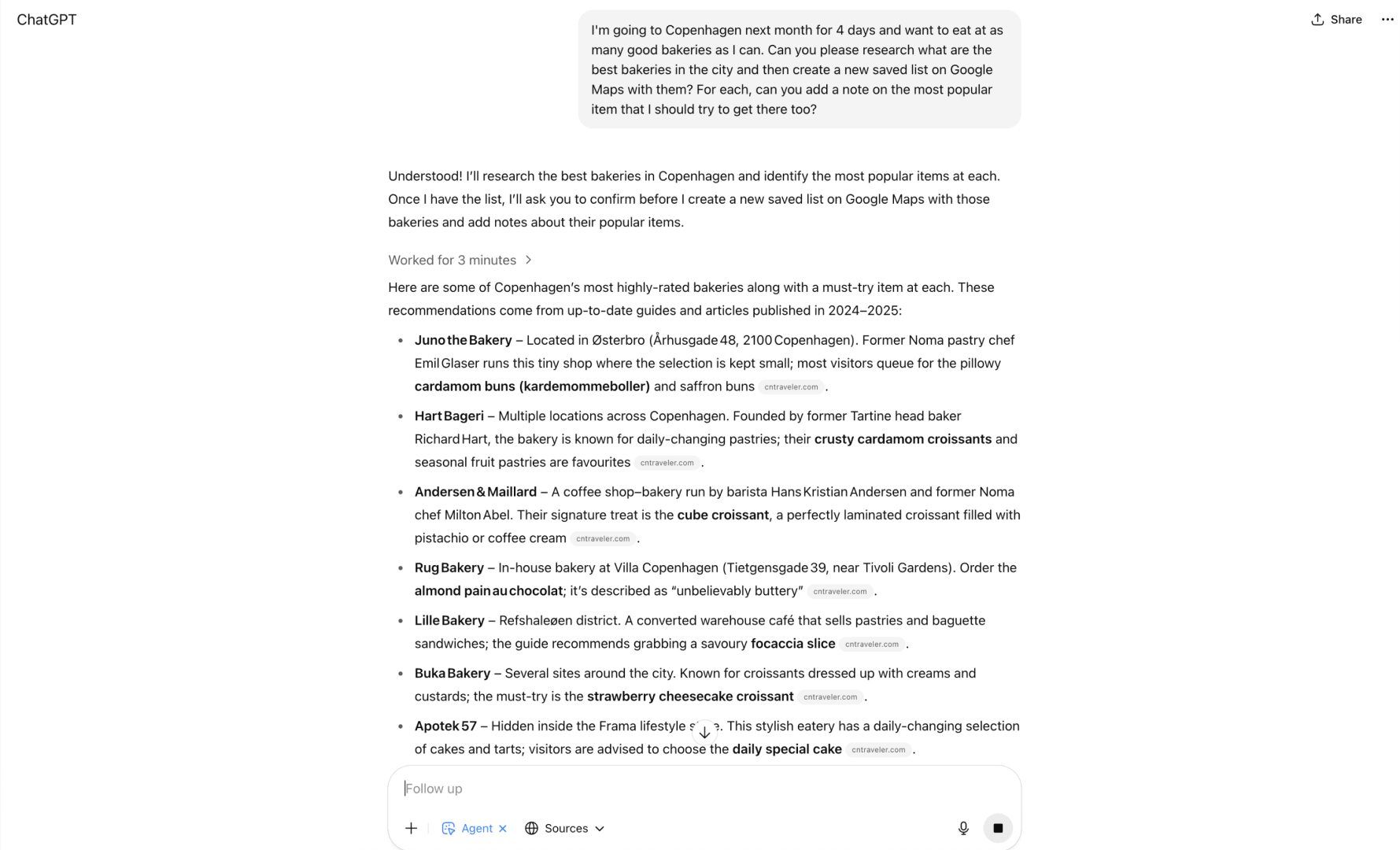

This time, it was quick. It only took about 3 minutes to give me a list of bakeries, along with the must-try item from each one.

I actually liked this part. The list was solid, and the fact that it included a must-try item for each bakery made it even better.

After giving me the list, the Agent asked whether I wanted to proceed with it.

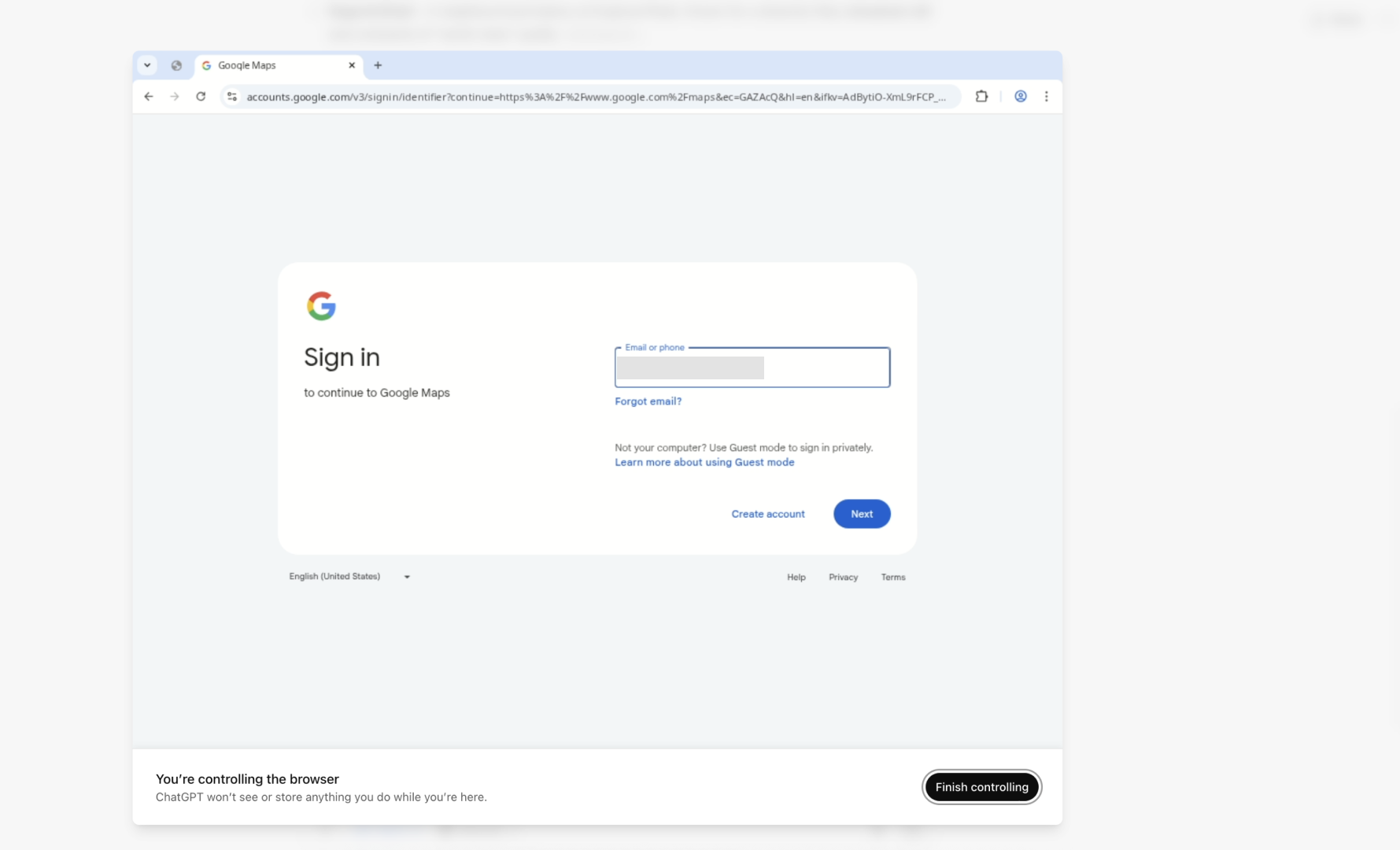

Then the Agent asked me to take over and sign in to my Google account.

It makes sense that I needed to sign in. It’s my Google account after all.

But once again, I got the same warning about potential data risks.

“Signing ChatGPT into websites can expose your data to malicious sites.”

It’s nice that the Agent can handle the repetitive parts, like searching and compiling the list. But honestly, for something this simple, I’m not sure it’s worth the risk. Still, this time I went ahead and logged in just to see what would happen.

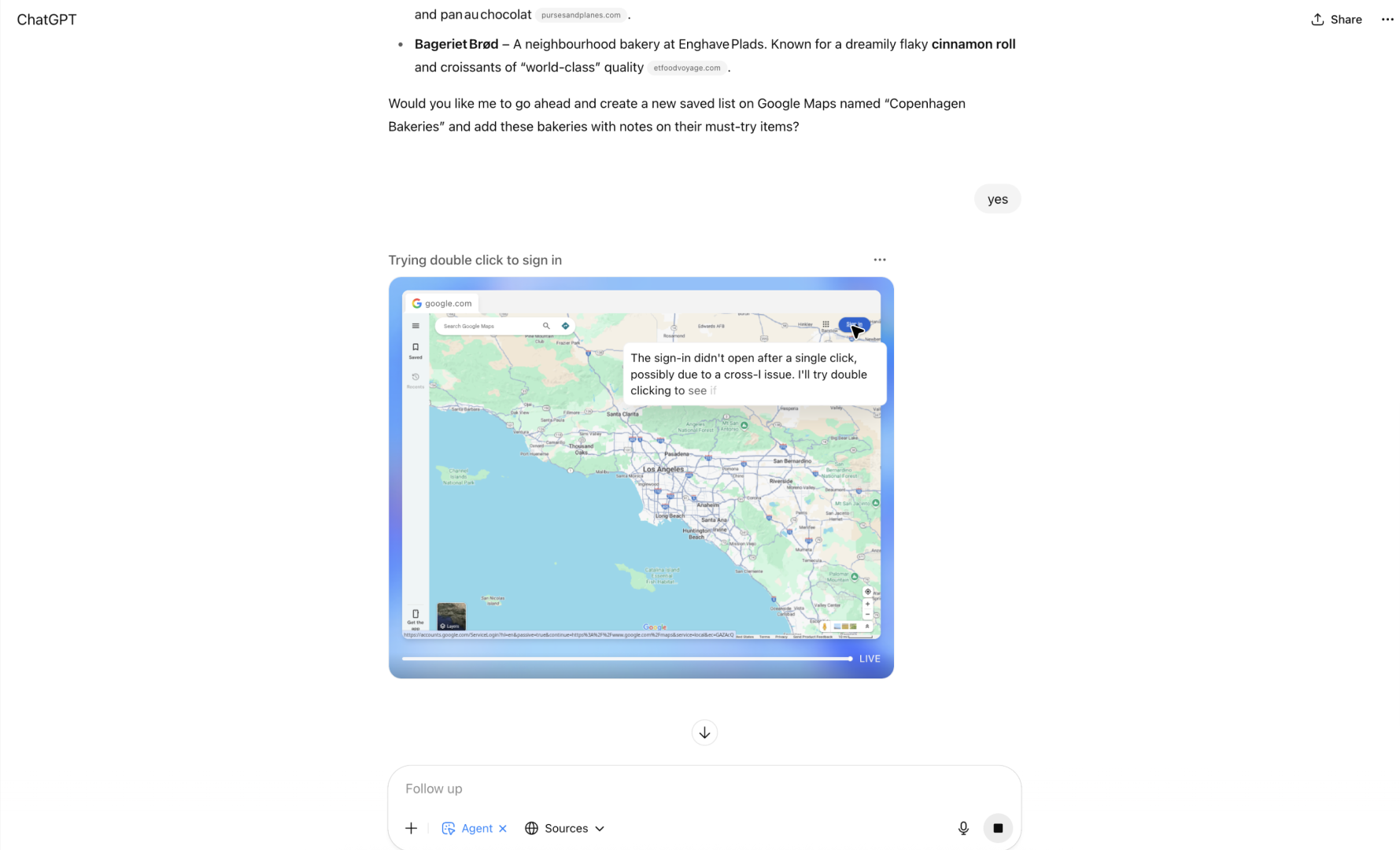

The Agent opened the browser so I could sign in to my Google account and give it access to my Google Maps.

However, this is where another thing got annoying.

There was a delay when I tried to type. Even worse, the Agent didn’t recognize my keyboard at first. I had to try several times just to enter my password.

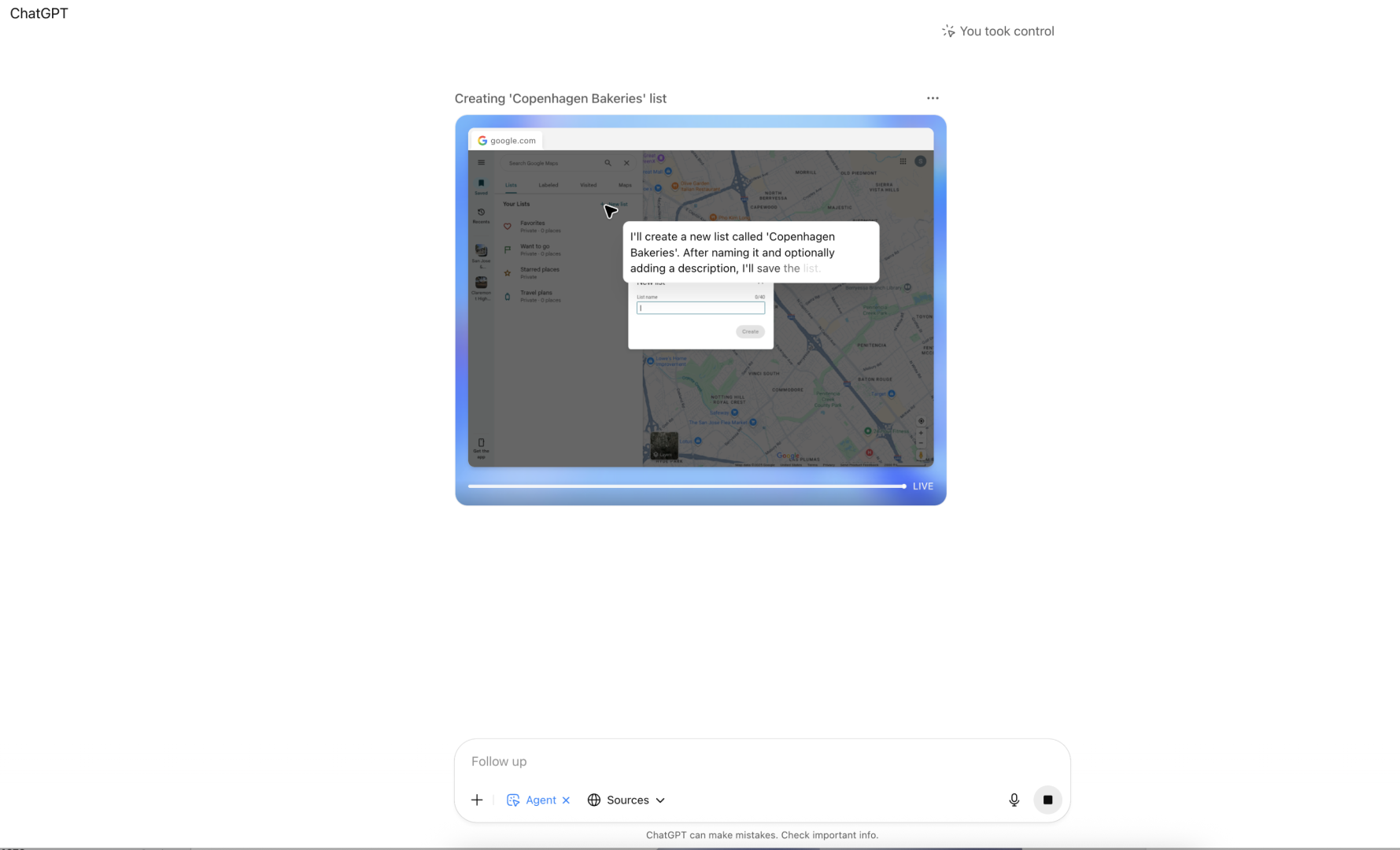

Anyway, I signed in. The Agent picked up from there and started adding the bakeries to my Google Maps list.

17 minutes later, the Agent said it had saved 11 places to my map.

But when I checked?

Only 9 were actually saved.

So even though the Agent got close, it didn’t fully complete the task. And it took a long time to get there.

Sure, it was cool that it found top-rated bakeries and listed what to try at each one. But it couldn’t finish the most important part: saving the full list to my map.

And honestly? I could have done the whole thing manually in less time. No login headaches. No weird browser delays. No warnings about data risks.

It’s a neat idea, but for now, I’d rather just do it myself.

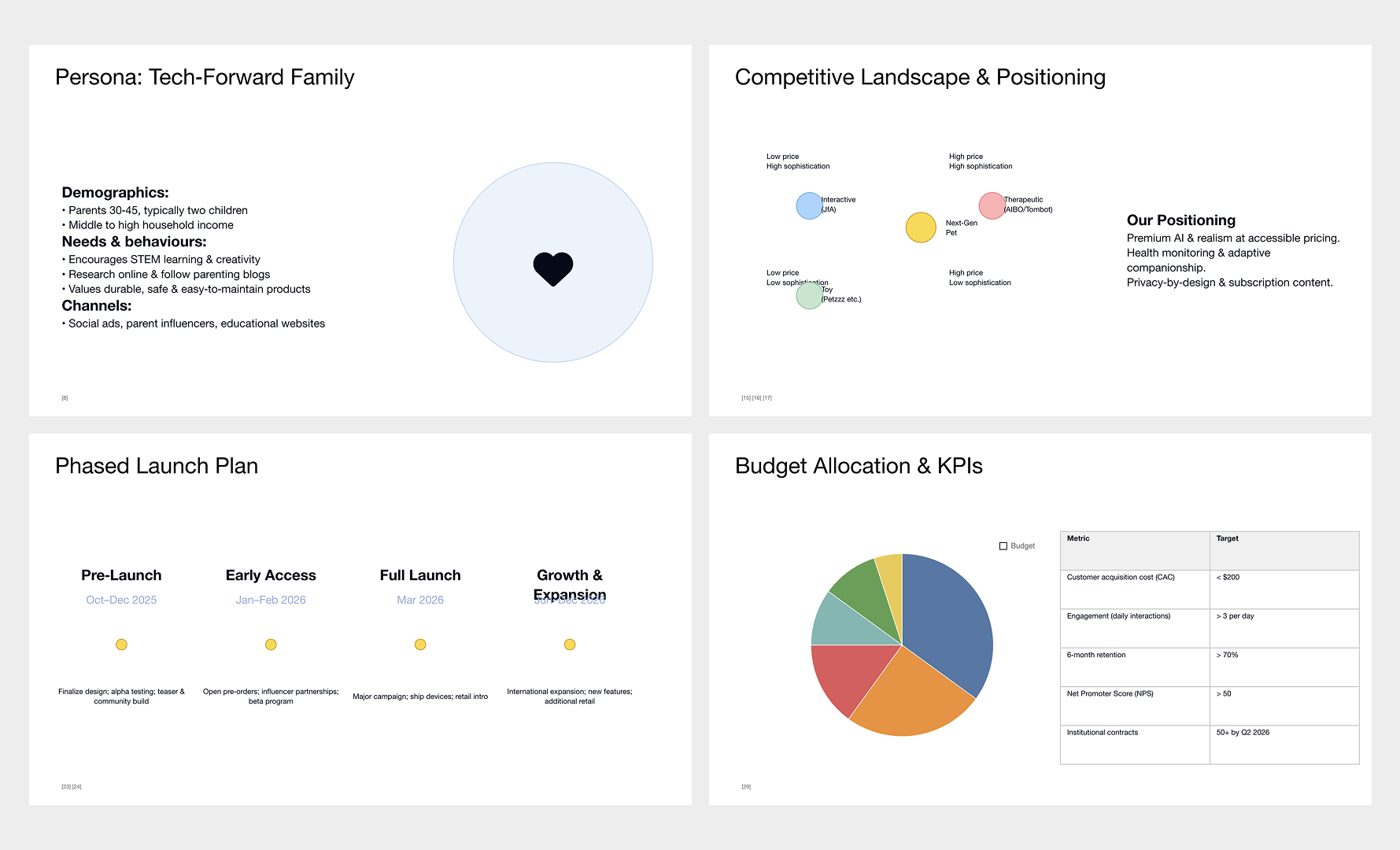

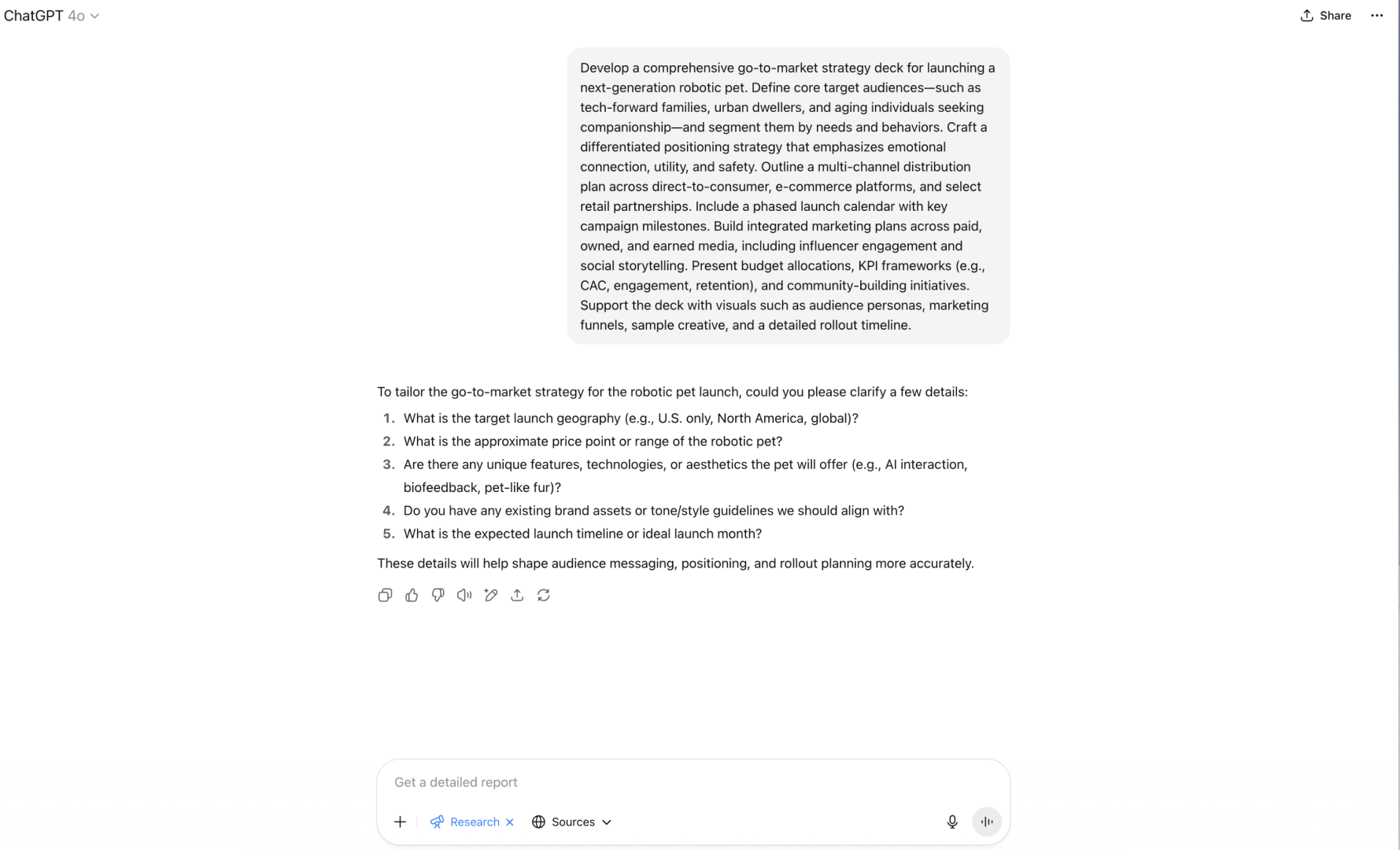

Test 4: Developing a go-to-market strategy deck

Next, I tried a prompt from the Presentations category. This was one of the features OpenAI highlighted when Agent Mode launched:

“Develop a comprehensive go-to-market strategy deck for launching a next-generation robotic pet.”

You could already do this with regular ChatGPT. You can ask for a PowerPoint deck and combine it with Deep Research. So I wasn’t sure what the Agent would actually add. I decided to try it anyway.

It took about 18 minutes to generate the presentation. Not too bad. Deep Research can sometimes take around that long too.

The result was a basic slide deck. However, the design wasn’t great.

Text was misaligned. The graph didn’t use visuals like bars or lines. It just showed raw text, which made it hard to read.

The content itself wasn’t terrible, but it wasn’t very strong either. It felt broad and surface-level.

At this point, I got curious. What if I gave the same prompt to Deep Research?

As soon as I entered it, Deep Research asked a few follow-up questions.

This is one of my favorite parts of that mode. For research tasks, I think asking clarifying questions and narrowing the focus makes a big difference.

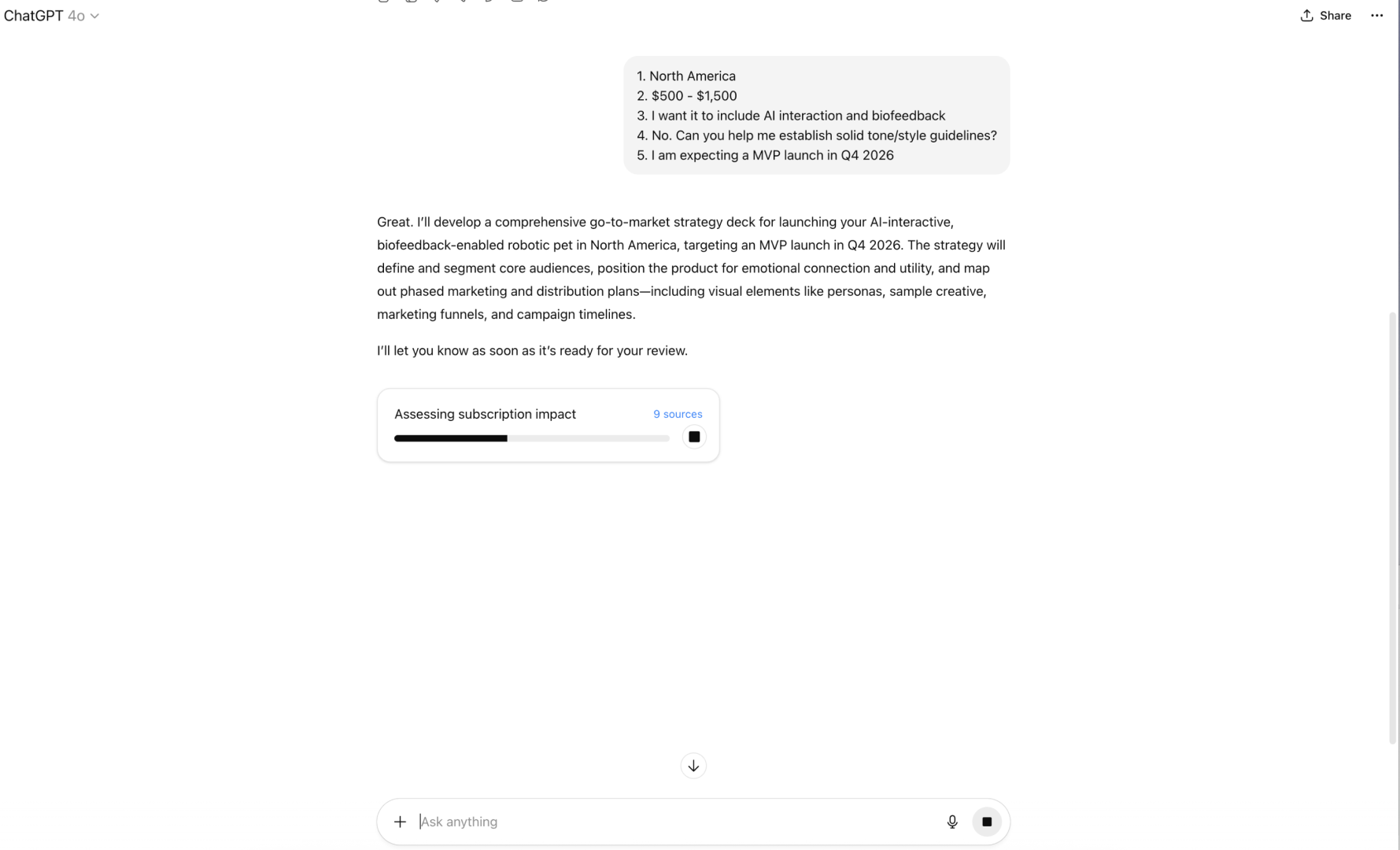

I answered with a few made-up details. Deep Research got to work and started building the strategy.

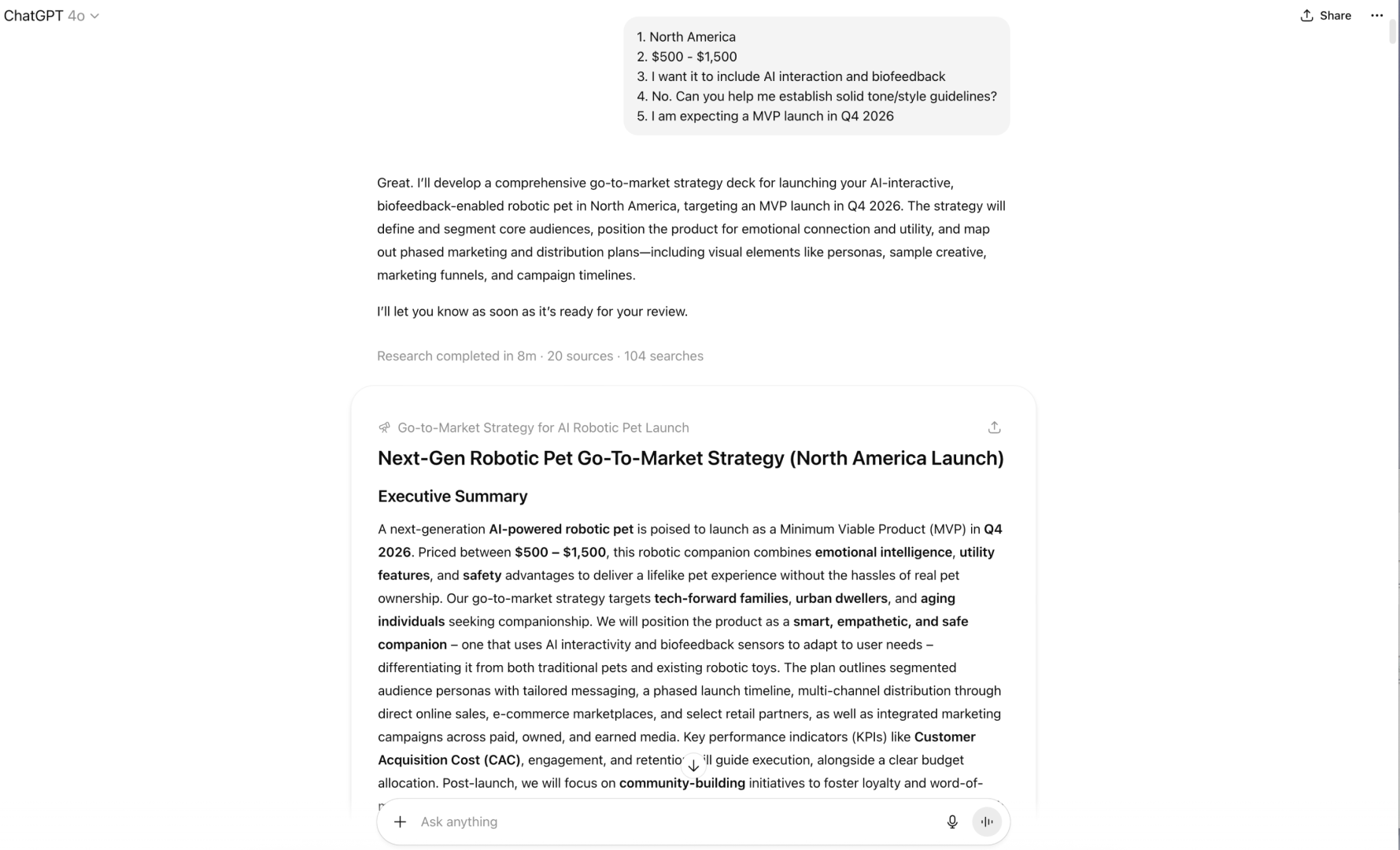

After about 8 minutes, it returned a long and detailed strategy.

It wasn’t a presentation deck. It was just a wall of text.

But it had solid structure, good insights, and included relevant data and sources. It felt more reliable and thoughtful.

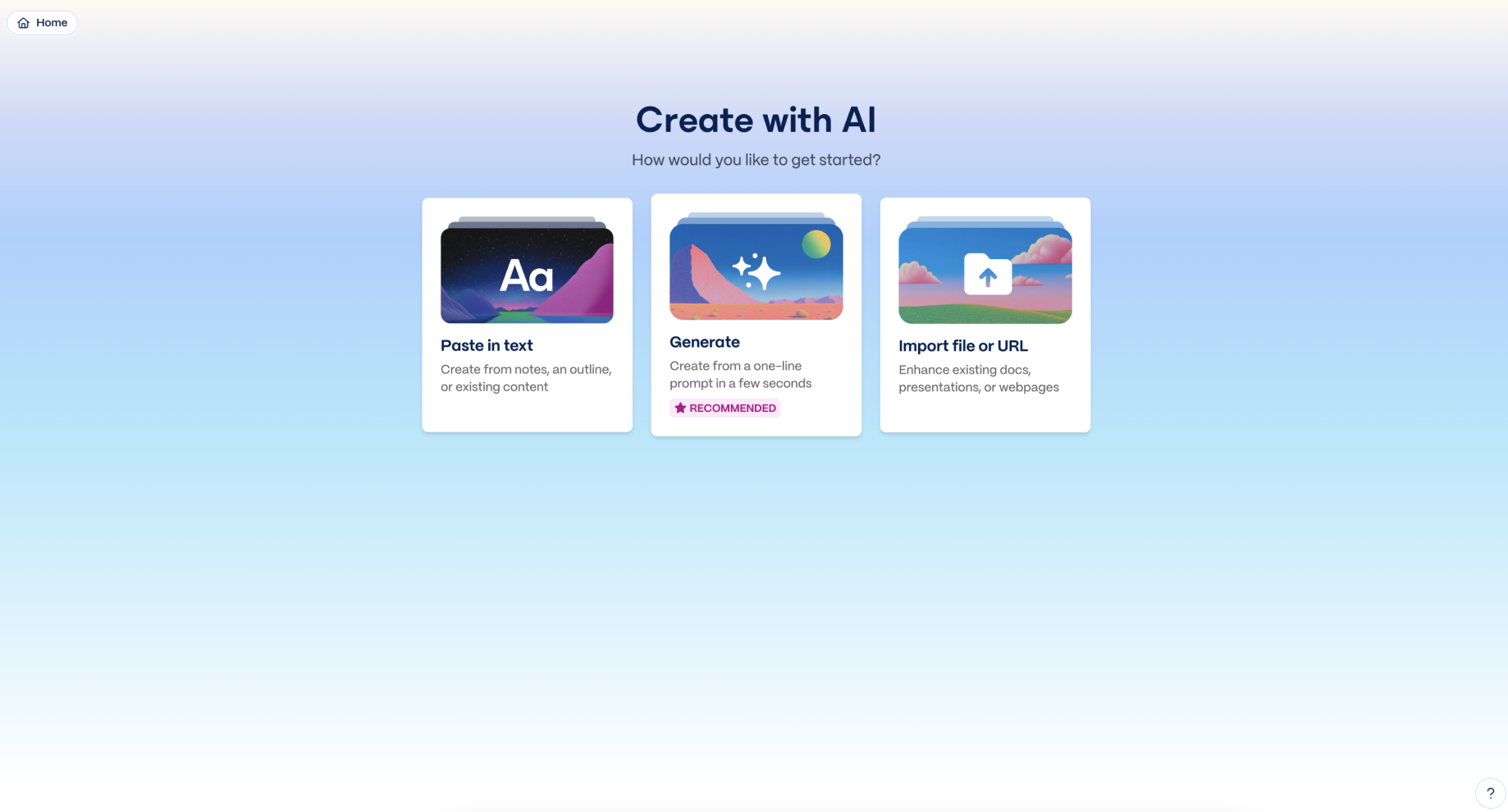

Then I opened up Gamma.app, one of my favorite tools for building presentations, and pasted the Deep Research result into Gamma.

It was very easy to use. You can adjust the theme, text length, art style, and number of slides. Gamma automatically formats the content based on how many pages you want.

(If you haven’t used Gamma, give it a try!)

Once I clicked “Generate,” it built the slide deck in real time.

It took about 1 minute to finish everything.

Each slide came with high-quality AI-generated visuals that matched the content. The final result looked clean and professional.

So here’s the thing.

Between Deep Research and Gamma, the entire process took about 10 to 11 minutes.

The result was much better than what I got from Agent Mode, and it took less time.
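Here’s the same comparison as a small Python sketch. The minute values are the ones I reported above; the variable names are made up for illustration, and treating the gap between the 9 minutes of generation time and my 10 to 11 minute total as hand-off time between the two tools is an assumption.

```python
# Times in minutes, taken from the deck test above.
agent_minutes = 18          # Agent Mode: one tool, start to finish
deep_research_minutes = 8   # Deep Research: strategy write-up
gamma_minutes = 1           # Gamma: slide generation

# Pure generation time for the two-tool workflow.
generation_minutes = deep_research_minutes + gamma_minutes

# End-to-end total as reported (10 to 11 minutes). The extra time on top of
# generation is assumed to be the hand-off: answering follow-up questions,
# copying the text over, and picking settings in Gamma.
reported_total = (10, 11)
handoff = tuple(t - generation_minutes for t in reported_total)
saved = tuple(agent_minutes - t for t in reversed(reported_total))

print(f"Generation only: {generation_minutes} min")
print(f"Hand-off overhead: {handoff[0]} to {handoff[1]} min")
print(f"Time saved vs. Agent Mode: {saved[0]} to {saved[1]} min")
```

Even with the hand-off included, the two-tool route comes out 7 to 8 minutes ahead.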

If I can get higher quality output, faster, even if it involves using two tools instead of one, I’m going to stick with that.

Agent Mode still has potential, but for this kind of task, I’m not switching over anytime soon.

Takeaways

After real-world testing, here’s what stood out to me about ChatGPT Agent Mode:

1. It’s promising, but not polished

Agent Mode feels like an early version of something powerful. The idea of a virtual assistant that can think, research, and take action is exciting. But right now, the experience is inconsistent, often slower, and not always reliable.

2. Regular ChatGPT is still faster (and often better)

In the Labubu comparison, regular ChatGPT answered in seconds with a cleaner, easier-to-skim result. In the deck test, Deep Research produced stronger content in less than half the time. The Agent adds a visual browser and automation, but that doesn’t always make the outcome better.

3. Simple tasks are usually faster to do manually

Booking flights, saving places to Google Maps, or finding a local service is still easier and quicker to do yourself. The Agent takes longer, can’t complete login or payment steps smoothly, and sometimes fails to finish the task entirely.

4. Security is a real consideration

When the Agent asks you to log in to personal accounts, you get a warning that your data could be exposed. While it’s good that OpenAI is transparent about the risk, it makes Agent Mode harder to trust for anything sensitive.

5. A two-tool workflow often beats Agent Mode

For presentation tasks, using ChatGPT (Deep Research) + Gamma.app was faster and gave better results than Agent Mode alone. Even though it meant using multiple tools, the quality and speed were worth it.

Final thought

Agent Mode is an exciting step toward true AI assistance. But right now, it feels more like a prototype than a daily tool. It’s great for exploration, not so much for execution.

If you’re curious, give it a try. But if you’re looking for speed, precision, or reliability, you’re probably better off sticking with the tools you already use.